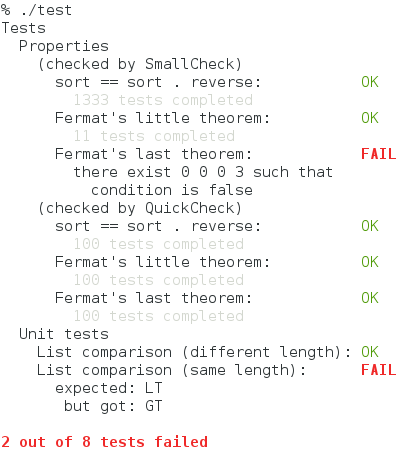

Tasty lets you combine your unit tests, golden tests, QuickCheck/SmallCheck properties, and any other types of tests into a single test suite.

(Note that whether QuickCheck finds a counterexample to the third property is determined by chance.)

In order to create a test suite, you also need to install one or more «providers» (see below).

Ingredients represent different actions that you can perform on your test suite. One obvious ingredient that you want to include is one that runs tests and reports the progress and results. Another standard ingredient is one that simply prints the names of all tests.
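As a rough sketch, the two standard ingredients described above can be passed explicitly to defaultMainWithIngredients, which should be roughly equivalent to using defaultIngredients. The example assumes the tasty, tasty-hunit and tasty-quickcheck packages are installed, and the test names are made up. It also shows an HUnit test case and a QuickCheck property living in one test tree.

```haskell
import Test.Tasty
import Test.Tasty.HUnit
import Test.Tasty.Ingredients (Ingredient)
import Test.Tasty.Ingredients.Basic (consoleTestReporter, listingTests)
import Test.Tasty.QuickCheck

-- Ingredients are tried in order: listingTests handles --list-tests,
-- otherwise consoleTestReporter runs the tests and reports the results.
ingredients :: [Ingredient]
ingredients = [listingTests, consoleTestReporter]

-- A unit test and a property combined in a single test tree.
tests :: TestTree
tests = testGroup "Example"
  [ testCase "2 + 2 == 4" $ 2 + 2 @?= (4 :: Int)
  , testProperty "reverse . reverse == id" $
      \xs -> reverse (reverse xs) == (xs :: [Int])
  ]

main :: IO ()
main = defaultMainWithIngredients ingredients tests
```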

When using the standard console runner, the options can be passed on the command line or via environment variables. To see the available options, run your test suite with the --help flag. The list of options shown depends on which ingredients and providers the test suite uses.

Every option can be passed via the environment. To obtain the environment variable name from the option name, replace hyphens - with underscores _, capitalize all letters, and prepend TASTY_. For example, the environment equivalent of --smallcheck-depth is TASTY_SMALLCHECK_DEPTH.
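The naming rule can be written as a small function. optionToEnvVar below is a hypothetical helper, not part of tasty's API; it assumes the option is given in its long form with the leading -- still attached.

```haskell
import Data.Char (toUpper)

-- Hypothetical helper (not part of tasty): derive the environment variable
-- name from a long option name such as "--smallcheck-depth".
optionToEnvVar :: String -> String
optionToEnvVar opt = "TASTY_" ++ map convert (dropWhile (== '-') opt)
  where
    -- Hyphens become underscores; every other character is upper-cased.
    convert '-' = '_'
    convert c   = toUpper c
```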

Note on boolean options: by convention, boolean ("on/off") options are specified using a switch on the command line, for example --quickcheck-show-replay instead of --quickcheck-show-replay=true. However, when passed via the environment, the option value needs to be True or False (case-insensitive), e.g. TASTY_QUICKCHECK_SHOW_REPLAY=true.
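A sketch of the value rule for boolean options, with parseBoolEnv as a hypothetical helper (tasty's own parser may differ in details such as how it reports errors):

```haskell
import Data.Char (toLower)

-- Hypothetical helper: an environment value for a boolean option must
-- spell True or False, in any letter case.
parseBoolEnv :: String -> Maybe Bool
parseBoolEnv s = case map toLower s of
  "true"  -> Just True
  "false" -> Just False
  _       -> Nothing  -- in this sketch, values like "1" or "yes" are rejected
```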

If you're using a non-console runner, please refer to its documentation to find out how to configure options at run time.

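Options can also be set from within the test program itself, for example by setting the corresponding environment variable before calling defaultMain:

```haskell
import Test.Tasty
import System.Environment (setEnv)

main :: IO ()
main = do
  -- Same effect as passing --num-threads=1 on the command line
  setEnv "TASTY_NUM_THREADS" "1"
  defaultMain tests

-- Placeholder test tree; substitute your own
tests :: TestTree
tests = testGroup "Tests" []
```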

Patterns

It is possible to restrict the set of executed tests using the -p/--pattern option.

Tasty patterns are very powerful, but if you just want to quickly run tests containing foo somewhere in their name or in the name of an enclosing test group, you can just pass -p foo. If you need more power, or if that didn't work as expected, read on.

A pattern is an awk expression. When the expression is evaluated, the field $1 is set to the outermost test group name, $2 is set to the next test group name, and so on up to $NF, which is set to the test's own name. The field $0 is set to all other fields concatenated using . as a separator.

As an example, consider a test inside two test groups:

```haskell
-- (pure ()) stands in for the actual test body
testGroup "One" [ testGroup "Two" [ testCase "Three" (pure ()) ] ]
```

When a pattern is evaluated for the above test case, the available fields and variables are:

```
$0 = "One.Two.Three"
$1 = "One"
$2 = "Two"
$3 = "Three"
NF = 3
```
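The field layout can be modelled in a few lines of Haskell. This is a hypothetical sketch for illustration (Fields, fieldsFor and field are not tasty names); it assumes the test's path is given as a list of names from the outermost group down to the test itself, and it returns the empty string for fields past NF, as awk does.

```haskell
import Data.List (intercalate)

-- Hypothetical model of the awk fields set up for one test.
data Fields = Fields
  { field0 :: String    -- $0: all names joined with '.'
  , fieldN :: [String]  -- $1 .. $NF, outermost group first
  , nf     :: Int       -- NF: number of fields
  } deriving (Eq, Show)

fieldsFor :: [String] -> Fields
fieldsFor path = Fields (intercalate "." path) path (length path)

-- Look up $i; $0 is the whole name, out-of-range fields are "".
field :: Fields -> Int -> String
field f 0 = field0 f
field f i
  | i >= 1 && i <= nf f = fieldN f !! (i - 1)
  | otherwise           = ""
```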

Here are some examples of awk expressions accepted as patterns:

- $2 == "Two": select the subgroup Two
- $2 == "Two" && $3 == "Three": select the test or subgroup named Three in the subgroup named Two
- $2 == "Two" || $2 == "Twenty-two": select two subgroups
- $0 !~ /skip/ or ! /skip/: select tests whose full names (including group names) do not contain the word skip
- $NF !~ /skip/: select tests whose own names (but not group names) do not contain the word skip
- $(NF-1) ~ /QuickCheck/: select tests whose immediate parent group name contains QuickCheck
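To make the examples concrete, each pattern can be read as a Haskell predicate over the test's path. The helpers below are hypothetical, and ~ is modelled as a case-sensitive substring test (isInfixOf), following how this section describes tasty's ~ operator rather than full awk regular expressions.

```haskell
import Data.List (intercalate, isInfixOf)

-- Path of a test: group names from the outermost, ending with the test name.
type Path = [String]

-- $i, 1-based; missing fields are the empty string.
fieldAt :: Path -> Int -> String
fieldAt p i = if i >= 1 && i <= length p then p !! (i - 1) else ""

-- $0: the full dotted name.
whole :: Path -> String
whole = intercalate "."

subgroupTwo, twoThenThree, twoOrTwentyTwo, noSkipAnywhere, noSkipInName, parentIsQC
  :: Path -> Bool
subgroupTwo p    = fieldAt p 2 == "Two"                                 -- $2 == "Two"
twoThenThree p   = fieldAt p 2 == "Two" && fieldAt p 3 == "Three"       -- $2 == "Two" && $3 == "Three"
twoOrTwentyTwo p = fieldAt p 2 `elem` ["Two", "Twenty-two"]              -- $2 == "Two" || $2 == "Twenty-two"
noSkipAnywhere p = not ("skip" `isInfixOf` whole p)                      -- $0 !~ /skip/
noSkipInName p   = not ("skip" `isInfixOf` fieldAt p (length p))         -- $NF !~ /skip/
parentIsQC p     = "QuickCheck" `isInfixOf` fieldAt p (length p - 1)     -- $(NF-1) ~ /QuickCheck/
```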

As an extension to the awk expression language, if a pattern pat contains only letters, digits, and characters from the set ._ - (period, underscore, space, hyphen), it is treated like /pat/ (and therefore matched against $0). This is so that we can use -p foo as a shortcut for -p /foo/.
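The shortcut rule amounts to a character check followed by wrapping the pattern in slashes. isPlainPattern and desugar are hypothetical names used only for this sketch; isAlphaNum also accepts non-ASCII letters and digits, which may or may not match tasty's exact definition.

```haskell
import Data.Char (isAlphaNum)

-- Hypothetical check: a pattern made only of letters, digits, '.', '_',
-- ' ' and '-' is treated as the substring pattern /pat/.
isPlainPattern :: String -> Bool
isPlainPattern = all (\c -> isAlphaNum c || c `elem` "._ -")

-- Rewrite a plain pattern into its awk form; other patterns pass through.
desugar :: String -> String
desugar pat
  | isPlainPattern pat = "/" ++ pat ++ "/"
  | otherwise          = pat
```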

The only deviation from awk that you will likely notice is that Tasty does not implement regular expression matching. Instead, $1 ~ /foo/ means that the string foo occurs somewhere in $1, case-sensitively. We want to avoid a heavy dependency on regex-tdfa or similar libraries; however, if there is demand, regular expression support could be added under a cabal flag.

The following operators are supported (in the order of decreasing precedence):

| Syntax | Name | Type of result | Associativity |
|---|---|---|---|
| (expr) | Grouping | Type of expr | N/A |
| $expr | Field reference | String | N/A |
| !expr<br>-expr | Logical not<br>Unary minus | Numeric<br>Numeric | N/A<br>N/A |
| expr + expr<br>expr - expr | Addition<br>Subtraction | Numeric<br>Numeric | Left<br>Left |
| expr expr | String concatenation | String | Right |
| expr < expr<br>expr <= expr<br>expr != expr<br>expr == expr<br>expr > expr<br>expr >= expr | Less than<br>Less than or equal to<br>Not equal to<br>Equal to<br>Greater than<br>Greater than or equal to | Numeric<br>Numeric<br>Numeric<br>Numeric<br>Numeric<br>Numeric | None<br>None<br>None<br>None<br>None<br>None |
| expr ~ pat<br>expr !~ pat<br>(pat must be a literal, not an expression, e.g. /foo/) | Substring match<br>No substring match | Numeric<br>Numeric | None<br>None |
| expr && expr | Logical AND | Numeric | Left |
| expr \|\| expr | Logical OR | Numeric | Left |
| expr1 ? expr2 : expr3 | Conditional expression | Type of selected expr2 or expr3 | Right |

The following built-in functions are supported:

- substr(s, m[, n]): Return the at most n-character substring of s that begins at position m, numbering from 1. If n is omitted, or if n specifies more characters than are left in the string, the length of the substring will be limited by the length of the string s.
- tolower(s): Convert the string s to lower case.
- toupper(s): Convert the string s to upper case.
- match(s, pat): Return the position, in characters, numbering from 1, in string s where the pattern pat occurs, or zero if it does not occur at all. pat must be a literal, not an expression, e.g. /foo/.
- length([s]): Return the length, in characters, of its argument taken as a string, or of the whole record, $0, if there is no argument.
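For reference, here is one way to model these built-ins in Haskell. The function names are hypothetical, n is taken as an optional Int, and positions before 1 in substr are clipped the way most awk implementations do; tasty's own evaluator may handle edge cases (such as non-integer or negative arguments) differently.

```haskell
import Data.Char (toLower, toUpper)
import Data.List (findIndex, isPrefixOf, tails)

-- substr(s, m[, n]): at most n characters of s starting at position m (1-based).
substr :: String -> Int -> Maybe Int -> String
substr s m mn = take count (drop (start - 1) s)
  where
    start = max 1 m                               -- clip positions before 1
    end   = maybe (length s) (\n -> m + n - 1) mn -- last position wanted
    count = max 0 (end - start + 1)

-- tolower(s) and toupper(s)
awkToLower, awkToUpper :: String -> String
awkToLower = map toLower
awkToUpper = map toUpper

-- match(s, pat): 1-based position of the first occurrence of the literal
-- substring pat in s, or 0 if it does not occur.
match :: String -> String -> Int
match s pat = maybe 0 (+ 1) (findIndex (pat `isPrefixOf`) (tails s))

-- length(s); the argument-less form would apply this to $0.
awkLength :: String -> Int
awkLength = length
```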

Running tests in parallel

In order to run tests in parallel, you have to do the following:

- Compile (or, more precisely, link) your test program with the -threaded flag;
- Launch the program with +RTS -N -RTS.
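To check that both steps took effect, a test suite can print what the runtime reports. This is a small sketch built on base's Control.Concurrent (reportRuntime is a hypothetical name); rtsSupportsBoundThreads is True only in programs linked with -threaded.

```haskell
import Control.Concurrent (getNumCapabilities, rtsSupportsBoundThreads)

-- Diagnostic (not part of tasty): report whether the threaded RTS is in use
-- and how many capabilities are available for running tests in parallel.
reportRuntime :: IO ()
reportRuntime = do
  caps <- getNumCapabilities
  putStrLn $ "threaded RTS: " ++ show rtsSupportsBoundThreads
  putStrLn $ "capabilities: " ++ show caps
```

Calling reportRuntime at the start of main, before defaultMain, should print threaded RTS: True and a capability count greater than 1 when the program was built with -threaded and started with +RTS -N -RTS on a multi-core machine. In a cabal test-suite stanza, the flag is usually passed via ghc-options: -threaded.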

Timeout

To apply a timeout to individual tests, use the --timeout (or -t) command-line option, or set the option in your test suite using the mkTimeout function.

Timeouts can be fractional and can optionally be followed by a suffix: ms (milliseconds), s (seconds), m (minutes), or h (hours). When there's no suffix, seconds are assumed.

Example:

```
./test --timeout=0.5m
```

sets a 30-second timeout for each individual test.
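The accepted syntax can be mirrored by a small parser. parseTimeoutMicros is a hypothetical helper, not tasty's parser; it returns microseconds because that is the unit mkTimeout takes, and it relies on reads to split the number from its suffix.

```haskell
-- Hypothetical parser for timeout strings such as "0.5m", "500ms" or "2".
-- The result is in microseconds.
parseTimeoutMicros :: String -> Maybe Integer
parseTimeoutMicros str = case reads str :: [(Double, String)] of
  [(x, suffix)] -> (\k -> round (x * k)) <$> unitInMicros suffix
  _             -> Nothing
  where
    unitInMicros ""   = Just 1e6   -- no suffix: seconds
    unitInMicros "ms" = Just 1e3
    unitInMicros "s"  = Just 1e6
    unitInMicros "m"  = Just 6e7
    unitInMicros "h"  = Just 3.6e9
    unitInMicros _    = Nothing    -- unknown suffix
```

For example, parseTimeoutMicros "0.5m" gives Just 30000000, the same 30 seconds as the command-line example; a value like that could be set in code with localOption (mkTimeout 30000000) tests.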

Options controlling console output

The following options control behavior of the standard console interface:

- -q, --quiet: Run the tests but don't output anything. The result is indicated only by the exit code, which is 1 if at least one test has failed, and 0 if all tests have passed. Execution stops when the first failure is detected, so not all tests are necessarily run. This may be useful for various batch systems, such as commit hooks.
- --hide-successes: Report only the tests that have failed. Especially useful when the number of tests is large.
- -l, --list-tests: Don't run the tests; only list their names, in the format accepted by --pattern.
- --color: Whether to produce colorful output. Accepted values: never, always, auto. The default, auto, enables colors only when output goes to a terminal.

Custom options

It is possible to add custom options, too.

To do that:

- Define a datatype to represent the option, and make it an instance of IsOption.
- Register the options with the includingOptions ingredient.
- To query the option value, use askOption.

See the Custom options in Tasty article for some examples.
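Putting the three steps together, a minimal sketch might look like this. It assumes the tasty, tasty-hunit and tagged packages (tagged provides Tagged, which IsOption uses for optionName and optionHelp); the ExampleSize type and the example-size option name are made up.

```haskell
import Data.Proxy (Proxy (..))
import Data.Tagged (Tagged (..))
import Test.Tasty
import Test.Tasty.HUnit
import Test.Tasty.Options

-- Step 1: a datatype for the (made-up) option, with an IsOption instance.
newtype ExampleSize = ExampleSize Int
  deriving (Eq, Show)

instance IsOption ExampleSize where
  defaultValue = ExampleSize 10
  parseValue   = fmap ExampleSize . safeRead
  optionName   = Tagged "example-size"
  optionHelp   = Tagged "Size of the input used by the example test"

-- Step 3: query the option value while building the test tree.
tests :: TestTree
tests = askOption $ \(ExampleSize n) ->
  testCase ("sum of [1.." ++ show n ++ "]") $
    sum [1 .. n] @?= n * (n + 1) `div` 2

-- Step 2: register the option alongside the default ingredients.
main :: IO ()
main = defaultMainWithIngredients
  (includingOptions [Option (Proxy :: Proxy ExampleSize)] : defaultIngredients)
  tests
```

With includingOptions in place, the option should show up in --help and be settable as --example-size=100 or, following the environment naming rule above, as TASTY_EXAMPLE_SIZE=100.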

Project organization and integration with Cabal

There may be several ways to organize your project. What follows are not Tasty's requirements but my recommendations.

Tests for a library

Place your test suite sources in a dedicated subdirectory (called tests here) instead of putting them among the main library sources.

The directory structure will be as follows:

```
my-project/
  my-project.cabal
  src/
    ...
  tests/
    test.hs
    Mod1.hs
    Mod2.hs
    ...
```

test.hs is where your main function is defined. The tests may be contained in test.hs or spread across multiple modules (Mod1.hs, Mod2.hs, ...) which are then imported by test.hs.

Add the following section to the cabal file (my-project.cabal):

```cabal
test-suite test
  default-language:
    Haskell2010
  type:
    exitcode-stdio-1.0
  hs-source-dirs:
    tests
  main-is:
    test.hs
  build-depends:
      base >= 4 && < 5
    -- insert the current version of tasty here
    , tasty >= 0.7
    -- depend on the library we're testing
    , my-project
    , ...
```

Tests for a program

All the above applies, except you can't depend on the library if there's no library. You have two options:

- Re-organize the project into a library and a program, so that both the program and the test suite depend on this new library. The library can be declared in the same cabal file.
- Add your program sources directory to the hs-source-dirs field of the test suite. Note that this will lead to double compilation (once for the program and once for the test suite).

Dependencies

Tasty executes tests in parallel to make them finish faster. If this parallelism is not desirable, you can declare dependencies between tests, so that one test will not start until certain other tests finish.

Dependencies are declared using the after combinator:

- after AllFinish "pattern" my_tests will execute the test tree my_tests only after all tests that match the pattern finish.
- after AllSucceed "pattern" my_tests will execute the test tree my_tests only after all tests that match the pattern finish, and only if they all succeed. If at least one dependency fails, then my_tests will be skipped.

The relevant types are:

```haskell
after
  :: DependencyType -- ^ whether to run the tests even if some of the dependencies fail
  -> String         -- ^ the pattern
  -> TestTree       -- ^ the subtree that depends on other tests
  -> TestTree       -- ^ the subtree annotated with dependency information

data DependencyType = AllSucceed | AllFinish
```

The pattern follows the same AWK-like syntax and semantics as described in Patterns. There is also a variant named after_ that accepts the AST of the pattern instead of a textual representation.

Let's consider some typical examples. (A note about terminology: here by "resource" I mean anything stateful and external to the test: it could be a file, a database record, or even a value stored in an IORef that's shared among tests. The resource may or may not be managed by withResource.)

- Two tests, Test A and Test B, access the same shared resource and cannot be run concurrently. To achieve this, make Test A a dependency of Test B:

  ```haskell
  testGroup "Tests accessing the same resource"
    [ testCase "Test A" $ ...
    , after AllFinish "Test A" $
        testCase "Test B" $ ...
    ]
  ```

- Test A creates a resource and Test B uses that resource. Like above, we make Test A a dependency of Test B, except now we don't want to run Test B if Test A failed because the resource may not have been set up properly. So we use AllSucceed instead of AllFinish:

  ```haskell
  testGroup "Tests creating and using a resource"
    [ testCase "Test A" $ ...
    , after AllSucceed "Test A" $
        testCase "Test B" $ ...
    ]
  ```

Here are some caveats to keep in mind regarding dependencies in Tasty:

- If Test B depends on Test A, remember that either of them may be filtered out using the --pattern option. Collecting the dependency info happens after filtering. Therefore, if Test A is filtered out, Test B will run unconditionally, and if Test B is filtered out, it simply won't run.
- Tasty does not currently check whether the pattern in a dependency matches anything at all, so make sure your patterns are correct and do not contain typos. Fortunately, misspecified dependencies usually lead to test failures and so can be detected that way.
- Dependencies shouldn't form a cycle, otherwise Tasty will fail with the message "Test dependencies form a loop." A common cause of this is a test matching its own dependency pattern.
- Using dependencies may introduce quadratic complexity. Specifically, resolving dependencies is O(number_of_tests × number_of_dependencies), since each pattern has to be matched against each test name. As a guideline, if you have up to 1000 tests, the overhead will be negligible, but if you have thousands of tests or more, then you probably shouldn't have more than a few dependencies.

  Additionally, it is recommended that the dependencies follow the natural order of tests, i.e. that the later tests in the test tree depend on the earlier ones and not vice versa. If the execution order mandated by the dependencies is sufficiently different from the natural order of tests in the test tree, searching for the next test to execute may also have an overhead quadratic in the number of tests.
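To make the cost and the loop check above concrete, here is a rough model of dependency resolution. It is not tasty's implementation: the Test type, resolve and cycles are hypothetical, every dependency pattern is treated as a plain substring (the -p foo shortcut form), and cycle detection uses Data.Graph from the containers package. The list comprehension in resolve matches each pattern against each test name, which is where the O(number_of_tests × number_of_dependencies) cost comes from.

```haskell
import Data.Graph (SCC (..), stronglyConnComp)
import Data.List (isInfixOf)

-- Hypothetical test record: full dotted name ($0) and its dependency patterns.
data Test = Test { name :: String, deps :: [String] }

-- Build a graph node per test; edges point at every test whose name
-- matches one of its patterns (each pattern checked against each test).
resolve :: [Test] -> [(String, String, [String])]
resolve tests =
  [ (name t, name t, [ name u | p <- deps t, u <- tests, p `isInfixOf` name u ])
  | t <- tests ]

-- Names involved in each dependency cycle, including self-loops.
cycles :: [Test] -> [[String]]
cycles tests = [ ns | CyclicSCC ns <- stronglyConnComp (resolve tests) ]
```

In this model, cycles [Test "Test A" ["Test"]] reports [["Test A"]]: the pattern "Test" matches the test's own name, which is the self-dependency pitfall mentioned above.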

FAQ

- Q: When my tests write to stdout/stderr, the output is garbled. Why is that and what do I do?

  A: It is not recommended that you print anything to the console when using the console test reporter (which is the default one). See #103 for the discussion.

  Some ideas on how to work around this:

  - Use testCaseSteps (for tasty-hunit only).
  - Use a test reporter that does not print to the console (like tasty-ant-xml).
  - Write your output to files instead.

- Q: Why doesn't the --hide-successes option work properly? The test headings show up and/or the output appears garbled.

  A: This can happen sometimes when the terminal is narrower than the output. A workaround is to disable ANSI tricks: pass --ansi-tricks=false on the command line or set TASTY_ANSI_TRICKS=false in the environment. See issue #152.
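For the first question above, here is a sketch of the testCaseSteps workaround. It assumes the tasty and tasty-hunit packages; the test name, the step messages and stepsBody are made up. Progress messages go through the step callback, so they become part of the test's result instead of being written directly to the terminal while other tests are running.

```haskell
import Test.Tasty
import Test.Tasty.HUnit

-- Report progress through the `step` callback supplied by testCaseSteps
-- rather than printing to stdout.
stepsBody :: (String -> IO ()) -> Assertion
stepsBody step = do
  step "Preparing input"
  let xs = [3, 1, 2] :: [Int]
  step "Checking the maximum"
  maximum xs @?= 3

main :: IO ()
main = defaultMain $ testCaseSteps "Multi-step example" stepsBody
```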

Press

Blog posts and other publications related to tasty. If you wrote or just found something not mentioned here, send a pull request!

GHC version support policy

We only support the GHC/base versions from the last 5 years.

Maintainers

Roman Cheplyaka is the primary maintainer.

Oliver Charles is the backup maintainer. Please get in touch with him if the primary maintainer cannot be reached.