inf-backprop: Automatic differentiation and backpropagation.

Automatic differentiation library with efficient reverse-mode backpropagation for Haskell.

This package provides a general-purpose automatic differentiation system designed for building strongly typed deep learning frameworks. It offers:

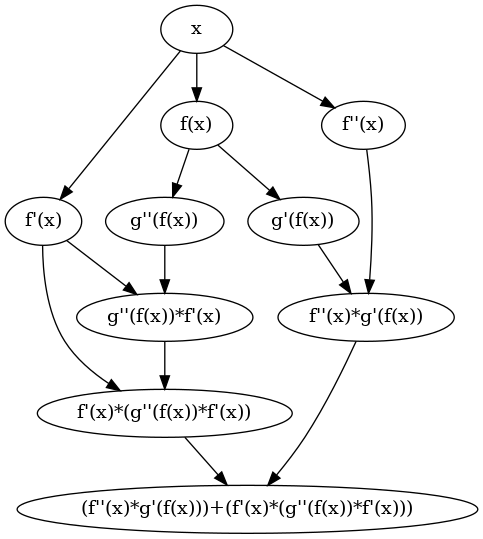

- Reverse-mode automatic differentiation (backpropagation)
- Support for higher-order derivatives
- Type-safe gradient computation
- Integration with numhask
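To make the first feature concrete, here is a minimal, dependency-free sketch of reverse-mode differentiation in plain Haskell. It does not use inf-backprop and relies on the standard `Prelude` numeric classes rather than numhask. The names `R`, `value`, `backprop` and `grad` are hypothetical. Each value carries a backpropagator that turns the adjoint of that value into adjoint contributions for the inputs. Running the function forward and then feeding the output adjoint `1` backwards yields the gradient. The sketch has no tape, so shared subexpressions are re-traversed on every use; it illustrates the idea, not the library's efficient implementation.

```haskell
-- Hypothetical type (not the inf-backprop API): a value paired with its
-- backpropagator. Given the adjoint (upstream gradient) of this node, the
-- backpropagator returns (input index, adjoint contribution) pairs.
data R = R { value :: Double, backprop :: Double -> [(Int, Double)] }

-- Each operation pushes the incoming adjoint g back to its operands,
-- scaled by the local partial derivative (the chain rule).
instance Num R where
  R a da + R b db = R (a + b) (\g -> da g ++ db g)
  R a da - R b db = R (a - b) (\g -> da g ++ db (negate g))
  R a da * R b db = R (a * b) (\g -> da (g * b) ++ db (g * a))
  negate (R a da) = R (negate a) (da . negate)
  abs (R a da)    = R (abs a) (\g -> da (g * signum a))
  signum (R a _)  = R (signum a) (const [])
  fromInteger n   = R (fromInteger n) (const [])

instance Fractional R where
  -- d(a/b)/da = 1/b and d(a/b)/db = -a/b^2
  R a da / R b db = R (a / b) (\g -> da (g / b) ++ db (negate g * a / (b * b)))
  fromRational r  = R (fromRational r) (const [])

-- Gradient of f at xs: tag input i with a backpropagator that reports its
-- adjoint under index i, run f forward, backpropagate the seed adjoint 1,
-- and sum the contributions collected for each input.
grad :: ([R] -> R) -> [Double] -> [Double]
grad f xs = [ sum [ d | (j, d) <- contributions, j == i ] | i <- [0 .. length xs - 1] ]
  where
    inputs = [ R x (\g -> [(i, g)]) | (i, x) <- zip [0 ..] xs ]
    contributions = backprop (f inputs) 1
```

Loaded into GHCi, `grad (\v -> case v of { [x, y] -> x * y + x; _ -> error "expected two inputs" }) [3, 4]` evaluates to `[5.0,3.0]`, the partial derivatives `y + 1` and `x` of `x * y + x` at (3, 4).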

The library emphasizes composability and type safety, making it suitable for research, prototyping neural networks, and implementing custom differentiable algorithms.
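One way to see why reverse mode composes well, and how a custom differentiable operation can be added, is to model a differentiable map as its forward result paired with a pullback (a vector-Jacobian product). The sketch below illustrates that idea under this assumption; it is not inf-backprop's actual design, and `D`, `sinD`, `squareD` and `derivD` are hypothetical names. Making `D` an instance of `Control.Category` means that composing with `>>>` applies the chain rule automatically, with pullbacks running in reverse order.

```haskell
import Prelude hiding (id, (.))
import Control.Category (Category (..), (>>>))

-- Hypothetical type (not the inf-backprop API): a differentiable map from a
-- to b, returning the forward result together with its pullback, which maps
-- an adjoint of the output to an adjoint of the input.
newtype D a b = D { runD :: a -> (b, b -> a) }

-- Composition applies the chain rule: values flow forward through f and
-- then g, while adjoints flow backward through g's pullback and then f's.
instance Category D where
  id = D (\x -> (x, id))
  D g . D f = D $ \x ->
    let (y, pullF) = f x
        (z, pullG) = g y
     in (z, pullF . pullG)

-- Custom primitives, each with a hand-written derivative rule.
sinD :: D Double Double
sinD = D $ \x -> (sin x, \dz -> dz * cos x)

squareD :: D Double Double
squareD = D $ \x -> (x * x, \dz -> dz * 2 * x)

-- Derivative of a scalar-to-scalar map: run forward, then pull back the
-- output adjoint 1.
derivD :: D Double Double -> Double -> Double
derivD d x = snd (runD d x) 1
```

For example, `derivD (squareD >>> sinD) 2` computes the derivative of `sin (x * x)` at `x = 2`, which is `4 * cos 4`. New primitives only need a forward rule and a pullback; composition takes care of the rest.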

See the tutorial for detailed examples and usage patterns.

Similar Projects:

Modules


Downloads

- inf-backprop-0.2.0.2.tar.gz (Cabal source package)

- Package description (revised from the package)

Note: This package has metadata revisions to its Cabal description that are newer than the description included in the tarball. To unpack the package including the revisions, use `cabal get`.


| Property | Value |
|---|---|
| Versions | 0.1.0.0, 0.1.0.1, 0.1.0.2, 0.1.1.0, 0.2.0.0, 0.2.0.1, 0.2.0.2 |
| Change log | CHANGELOG.md |
| Dependencies | base (>=4.7 && <5), combinatorial (<0.2), comonad (<5.1), composition (<1.1), data-fix (<0.4), deepseq (<1.6), extra (<1.9), finite-typelits (<0.3), fixed-vector (<2.2), ghc-prim (<0.14), hashable (<1.6), indexed-list-literals (<0.3), isomorphism-class (<0.4), lens (<5.4), numhask (<0.14), optics (<0.5), primitive (<0.10), profunctors (<5.7), safe (<0.4), simple-expr (>=0.2 && <0.3), Stream (<0.5), text (<2.2), transformers (<0.7), unordered-containers (<0.3), vector (<0.14), vector-sized (<1.7) |
| Tested with | ghc ==9.8.4, ghc ==9.10.3 |
| License | BSD-3-Clause |
| Copyright | 2023-2025 Alexey Tochin |
| Author | Alexey Tochin |
| Maintainer | Alexey.Tochin@gmail.com |
| Uploaded | by AlexeyTochin at 2025-11-23T12:29:57Z |
| Revised | Revision 1 made by AlexeyTochin at 2026-02-07T10:40:04Z |
| Category | Mathematics |
| Distributions | LTSHaskell:0.1.1.0, NixOS:0.1.1.0, Stackage:0.2.0.2 |
| Downloads | 438 total (19 in the last 30 days) |
| Rating | 2.0 (votes: 1), estimated by Bayesian average |
| Status | Docs uploaded by user; build status unknown (no reports yet) |